Long Island Financial Adviser Ordered To Pay $7M To Clients

Pace Haub Law Professor Jill I. Gross, an expert in securities arbitration, is featured in Newsday’s coverage of the more than $7 million in FINRA arbitration awards issued against A.G. Morgan Financial Advisors. Speaking about the role of regulators when repeated investor complaints arise, Professor Gross explains: “A number of disputes or complaints can lead the SEC and other regulators to shut down the brokerage or take other disciplinary steps.”

Nonprofit News Outlets Are Often Scared That Selling Ads Could Jeopardize Their Tax-Exempt Status, But IRS Records Show That’s Been Rare

Dyson Professor Katherine Fink pens an op-ed for The Conversation examining why many nonprofit news organizations avoid selling advertising, despite IRS records showing that fears over tax penalties or threats to nonprofit status are largely unfounded. Drawing on interviews with nonprofit newsroom leaders and an analysis of hundreds of IRS filings, Professor Fink finds that advertising revenue is both more permissible and less risky than many assume, even as political pressures under the Trump administration have made some nonprofits more cautious.

How Prosecutorial Incompetence Doomed The James Comey Case

In The Hill, Pace Haub Law Professor Bennett L. Gershman published a detailed commentary on how prosecutorial failures derailed the federal cases against former FBI Director James Comey and New York Attorney General Letitia James, drawing on his leading treatise Prosecutorial Misconduct to outline the constitutional and procedural breakdowns that undermined the prosecutions.

Op-Ed | Will Eric Adams be recharged for bribery and corruption?

Pace Haub Law Professor Gershman also wrote an op-ed for amNewYork examining whether Mayor Eric Adams could be recharged for bribery and corruption.

Colleges Ease The Dreaded Admissions Process As The Supply Of Applicants Declines

The Hechinger Report’s recent story on how colleges are easing the admissions process as the supply of applicants declines—featuring Pace—was picked up by The Los Angeles Times.

A Wrecking Ball Is Coming For America’s Nursing Workforce. Stop It | Opinion

College of Health Professions Professor Michele Lucille Lopez writes a piece in Lohud examining how federal loan-limit changes threaten the graduate nursing pipeline. Professor Lopez explains that reclassifying advanced nursing programs as “non-professional” reduces borrowing limits, making graduate education less accessible and potentially worsening shortages of nurse practitioners and nurse educators.

Pace University's New AI Degree Aims To Stay Ahead Of Tech Job Turmoil

Lohud visited Pace’s Pleasantville campus this week to learn more about Westchester’s first Bachelor of Science in Artificial Intelligence, launching in Fall 2026. Interim Seidenberg Dean Li-Chiou Chen said the new program is designed to “stay ahead of the curve” as AI reshapes the tech workforce, offering students a rigorous foundation in computer science and math before advancing to specialized coursework in neural networks, machine learning, language processing, and AI ethics.

The Fed's Not Ready to Channel Greenspan in Betting on Tech Boom

Bloomberg leads the week, featuring Pace University’s Fed Challenge Team in its Economics Daily Newsletter after the team won the 22nd Annual National College Fed Challenge, an extraordinary national achievement. Pace topped finalists Harvard College and UCLA.

Making an Impact at The Associated Press: Q+A with Liseberth Guillaume ’25

From mastering digital media tools to reporting stories across New York City, Liseberth Guillaume ’25 is putting her Pace training to work at The Associated Press.

Liseberth Guillaume

Class of 2025

MA in Communications and Digital Media

Why did you choose to pursue communications and digital media as a course of study?

I have worked in healthcare for over seven years, holding both clinical and operational roles. After graduating, I was recruited to work at The Associated Press in Elections Operations. That experience opened my eyes to the world of news and media, and I became fascinated by it. I knew this was the industry I wanted to grow in, but I also understood how quickly it changes. Pursuing this degree provided me with the core skills I needed to establish a strong foundation.

Why did you choose to enroll in the MA in Communications and Digital Media at Pace?

As I searched for programs that aligned with my interest in both media and operations, Pace stood out. The Communications and Digital Media program allowed me to explore both tracks in a way that perfectly supported my career goals. Courses such as Effective Speaking helped me grow as a communicator, while my media-focused classes expanded my understanding of AI in media and the role of nonprofit news organizations.

Tell us more about your roles as both an assistant operations manager in The Associated Press’s Elections Department and an intern at the AP New York news desk and how your work is meaningful to you.

At AP Elections, I work in an internal and customer-facing operations role. I help connect teams, such as our Decision Desk, Tabulation, Technology, Revenue, and Operations, so that information and planning stay aligned leading into election nights. My role supports the structure behind AP’s results reporting and helps ensure clients receive clear and accurate updates.

At the New York news desk, I am developing my journalism skills through writing, research, and field reporting. My solo bylines have included the St. Patrick’s Cathedral immigrant mural unveiling, the Grand Central subway scent story, and court coverage involving Sean “Diddy” Combs. I also produced a video story on the holiday-themed subway scent campaign. In addition, I supported coverage of Hurricane Melissa by helping connect reporters with Haitian and Caribbean diaspora communities. Further, I contributed to team coverage of the 24th anniversary of the 9/11 attacks and Prime Minister Netanyahu’s appearance at the United Nations.

How have your studies prepared you for your professional roles?

My studies have equipped me with both practical skills and the flexibility to focus on what I want to do in the long term. The program allows me to choose courses that support my goals, and those classes align directly with the work I do at AP. For example, I am currently taking a digital video field production class, and the tools and techniques I’m learning in that course have helped me complete a recent video assignment for the newsroom with confidence. The combination of hands-on practice and the freedom to shape my coursework has made me feel prepared for my role.

What would you like to do upon graduation/what are your career goals?

God willing, I want to keep growing in the news and media industry. This program and my work at AP have shown me how much journalism and operations work together, and I want to continue building experience on both sides. My goal is to grow into a global news leader that helps create space for communities often overlooked and ensures their stories are told with accuracy, care, and understanding.

What advice would you like to give to current students?

I would encourage students to find a mentor in their field of interest. I have a mentor at AP who has helped me grow more confident and reminded me that I always bring something valuable to the table, whether it is my experience or my perspective. Having someone who believes in you and guides you can make a real difference, so I would tell students to find that one person who can support them throughout their journey. I would also say to stay curious and open to taking on different roles. You never know where an opportunity might lead you later on.

The Faculty Powering Student Discovery

Great research starts with great mentors. Meet the 2024 and 2025 recipients of the Faculty Undergraduate Research Mentor Award.

Every year, the Center for Undergraduate Research Experiences (CURE) holds the Fall Undergraduate Research and Creative Inquiry Presentation Series—a virtual showcase of original research by Pace students who received summer undergraduate research awards. Guided by the mentorship of dedicated faculty, these presentations offer students an opportunity to hone their communication skills, share their findings, and engage with the Pace Community as rising researchers.

In 2023, a Pace alumnus attended the event and was struck not only by the caliber of student research, but also by the impact of faculty mentorship on the students. Moved by what he saw, the alumnus made a generous gift to recognize the dedication of these mentors. Assistant Provost for Research Maria Iacullo-Bird, in consultation with the CURE Faculty Advisory Board, transformed that gift into the Faculty Undergraduate Research Mentor Award, a new award honoring faculty who have demonstrated exemplary mentoring in guiding undergraduate research at Pace. The Faculty Advisory Board developed the call for self-nominations and conducted the award review.

Since the establishment of the award, two cohorts of faculty have been honored: Adrienne Kapstein and Sid Ray in 2024; and Eric Brenner, Nancy Krucher, Elmer-Rico Mojica, and Christelle Scharff in 2025.

Meet the faculty mentors helping students turn questions into research-based inquiry, and research outcomes into real-world change.

2024 Recipients

Adrienne Kapstein, MFA

Adrienne Kapstein is an associate professor and program head of the International Performance Ensemble (IPE) in the newly launched BFA in Performance Making. As both an artist and educator, she specializes in collective creation (also known as devising) and brings this approach into the classroom. Over the past 12 years as full-time faculty at Pace, she has integrated more than 35 students and alumni into 12 artistic projects across 3 countries. Kapstein’s theatrical work has been presented at Lincoln Center, Soho Rep, New Victory LabWorks, and internationally in Canada, Scotland, Ireland, Romania, Croatia, and China.

Known for her collaborative approach to performance, Kapstein brings that same energy to student research. “For me, mentoring is another kind of collaboration,” she explains. “There is a give and take between mentor and mentee, and oftentimes the lines blur as to who is leading whom. Although I have more experience, and I am excited to share it, there are many moments when the student’s fresh eye or alternative perspective gives me new insight. Mentoring is reciprocal, nourishing, and energizing.”

That collaborative spirit is exactly what earned her the faculty mentorship award. “Receiving the inaugural Faculty Undergraduate Research Mentor Award was a great honor and further confirmation of how important Pace values and supports undergraduate research,” says Kapstein.

Sid Ray, PhD

Dyson College of Arts and Sciences, English

Sid Ray is a professor of English and women’s and gender studies whose research focuses on Shakespeare and other early modern playwrights, transhistorical dramaturgy, and performance. She is the author of two monographs and three edited collections, and was a co-principal investigator on The Ground Beneath Our Feet, a place-based research initiative supported by a National Endowment for the Humanities Initiative Grant. Ray brings scholarly depth and cultural inquiry into the classroom and received the Kenan Award for Excellence in Teaching in 2016.

“Mentoring students in this hyper-focused way and scaling it up has been deeply rewarding,” Ray says. “It empowers students, giving them authority over their ideas, and life skills for accessing and presenting knowledge in their careers after Pace. It also teaches us the importance of place-based, experiential education.”

For Ray, receiving the award was an honor—but the opportunity to mentor students means even more. “I was deeply honored to receive the Undergraduate Research Mentor of the Year award in 2024,” she says. “Working with students, acting as their research assistant to help them find gems of information and knowledge that add to our understanding of the ground beneath our feet, that tell those untold stories, has been a great joy.”

2025 Recipients

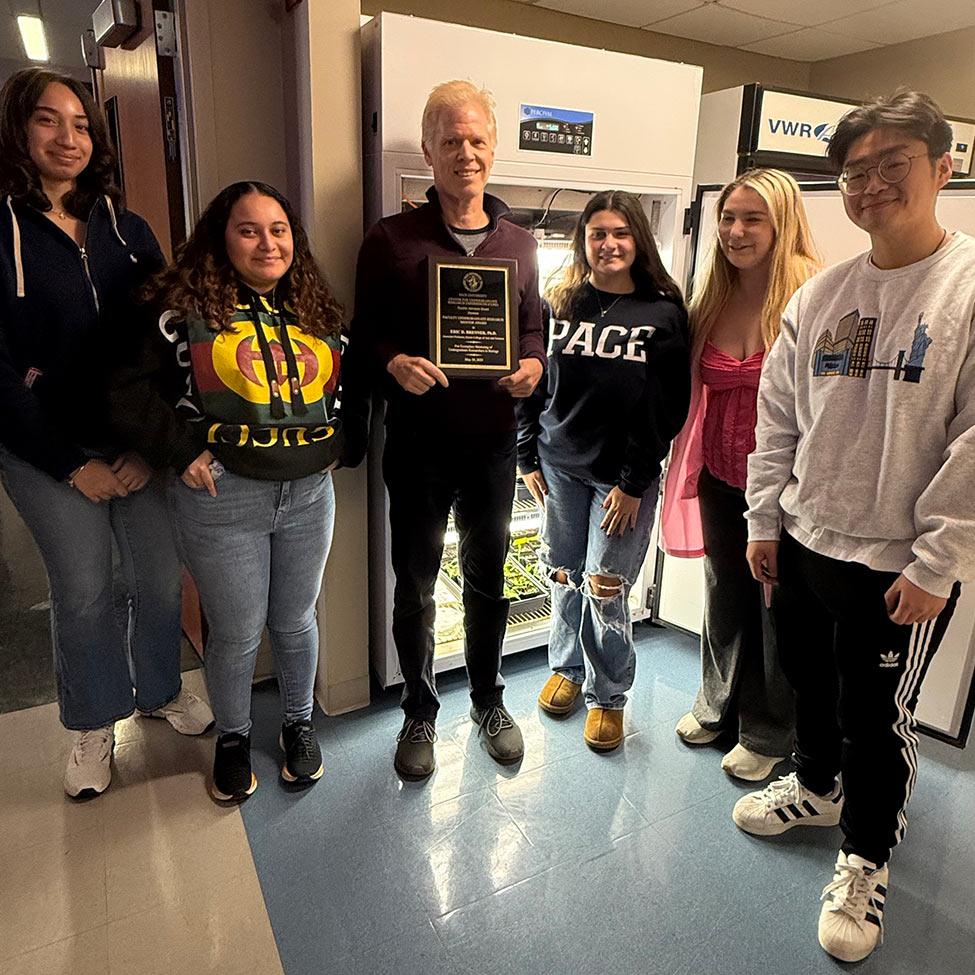

Eric Brenner, PhD

Dyson College of Arts and Sciences, Biology

Eric Brenner is an associate professor in the biology department, with areas of plant science expertise including pathogen resistance, population biology, signaling, and evolution. As part of his current research on complex plant behaviors, he developed Plant Tracer, a software tool that tracks and characterizes plant movement from time-lapse movies—a tool now used for teaching and research in universities, high schools, and middle schools. He is co-founder of the Society for Plant Signaling and Behavior, and his work has been featured in several periodicals, including The New York Times, The New Yorker, and Science Magazine.

Brenner’s passions for biology and teaching are deeply intertwined. “Teaching our Pace undergraduate students is not just very important to me—it is truly a personal mission,” he says. “These undergraduates are the driving force behind my research program, and they represent the next generation of science.”

For Brenner, that mission includes preparing students to meet urgent global challenges. “Educating students about plant care is essential for food security.” He goes further, saying, “Plants are fundamental to life on our Earth, teaching the next generations of scientists to manage our environment and food sources is critical to survival.”

Nancy Krucher, PhD

Dyson College of Arts and Sciences, Biology

Nancy Krucher has taught pre-med courses at Pace for 25 years, including general biology, molecular and cellular biology, and biochemistry. Her research has centered on mentoring 90 Pace undergraduate students in novel cancer research projects, supported by 6 grants from the National Cancer Institute of the National Institutes of Health totaling $1.8 million since 2002. At present, her research efforts are focused on designing strategies to treat pancreatic, colon, and skin cancers using new combinations of targeted therapies.

Krucher’s own passion for research began as an undergraduate working in a muscular dystrophy lab, an uncommon opportunity for undergraduates at the time. “As a first-generation college student, scientific research was a complete mystery to me when I entered college,” she says. “I was hooked on research after my undergraduate research experience and now love to share that excitement for research with Pace undergraduates.”

That passion has evolved into a deep commitment to mentoring the next generation of researchers. According to Krucher, “My interaction and mentorship of undergraduate students in my research laboratory is the most important and rewarding part of my work as a professor.”

Elmer-Rico Mojica, PhD

Dyson College of Arts and Sciences, Chemistry

Elmer-Rico Mojica is a professor of chemistry and physical sciences at Pace University. Since 2012, he has mentored more than 100 undergraduates at Pace, and his students have presented at conferences nationwide, won best paper and poster awards, and contributed to 36 peer-reviewed publications. Under his guidance, 34 students have secured internal research grants, and many have gone on to participate in Research Experiences for Undergraduates (REUs), attend graduate school, or pursue medical degrees. As director of the Collegiate Science and Technology Entry Program, he leads a growing cohort of underrepresented and low-income STEM majors and helped secure the program’s renewal for another 5 years, expanding support for 40 students.

Grounded in the motto Opportunitas, Mojica views mentorship not just as teaching, but as transformation. “Mentoring undergraduate students is among the few opportunities that afford extended one-on-one teaching. It’s where the impact is deepest, it’s the purest form of teaching,” he says.

For Mojica, the recognition of this award is a reflection of every student who has walked through his lab doors. “This award isn’t just about me,” he reflects. “It’s about every student who spent time in the lab, who nervously presented their first poster, who realized for the first time that they belonged in science. They’re the reason I mentor.”

Christelle Scharff, PhD

Seidenberg School of Computer Science and Information Systems, Computer Science

Christelle Scharff is a professor of computer science, associate dean, and director of Pace’s AI Lab. Her current work centers on applied and generative artificial intelligence (AI), including the development of AI models for creativity, fashion, and social good.

Scharff has led projects on machine learning, global software engineering, and mobile innovation, with more than 30 papers published in these areas. Her research has been supported by the National Science Foundation, IBM, Microsoft, VentureWell, and Google. She leads strategic initiatives in AI education and research, interdisciplinary collaborations, and international partnerships. She has also served twice as a U.S. Fulbright Scholar in Senegal.

Scharff has diligently worked as a researcher and mentor at Pace, helping students gain first-hand experience tackling the real-world problems of tomorrow. “For me, mentoring is about empowering students to think critically, explore new ideas, and grow into contributors to knowledge,” says Scharff. “I love witnessing their evolution throughout the research journey.” She goes on to say, “Receiving the 2025 Faculty Undergraduate Research Mentor Award is a true honor.”

Visit the CURE website, Undergraduate Research Experiences, for more information and to learn about the 2026 Faculty Undergraduate Research Award Call for Nominations.

More from Pace

Following a national search, Pace University has announced the appointment of Alison Carr-Chellman, Ph.D., as its next provost and executive vice president for academic affairs. She will begin her tenure on January 20, 2026.

The Pace University Federal Reserve Challenge team has been named the national winner of the 22nd Annual College Fed Challenge, the Federal Reserve recently announced.

The Elisabeth Haub School of Law at Pace University, in partnership with the North America Committee of the Campaign for Greener Arbitrations (CGA-NA), proudly hosted a special New York Arbitration Week program, titled “Greener Arbitration: Insights from the Next Generation of Legal Scholarship.”