Eight In-Demand Careers in Fintech: High-Growth Jobs in Finance and Technology

Explore high-paying fintech careers and the skills employers want, and learn how the right education can prepare you for success in this growing field.

Fintech is redefining the way people interact with money. Mobile payments, digital banking, and cryptocurrency have changed everyday transactions, and emerging technologies continue to push the industry forward. As financial services evolve, companies need professionals who can develop new tools, improve security, and make financial systems more efficient.

If you’re considering a career in fintech, you’ll find strong salaries, job stability, and opportunities to work at the cutting edge of finance and technology. This guide explores high-demand finance and technology jobs, the skills employers value, and the best ways to prepare for long-term success in the field.

What is Fintech?

Fintech refers to the technologies and systems that improve how people and organizations manage money, assess risk, and make financial decisions. These innovations power mobile payments, automate investment platforms, support financial reporting, and drive smarter business operations.

Fintech is also reshaping industries beyond banking and investment. For example, manufacturing firms use digital payment platforms to modernize vendor transactions, and logistics companies rely on financial data systems to manage global supply chains. These applications require professionals who can lead transformation efforts, combining financial strategy with applied technology.

The industry has experienced rapid expansion, and projections indicate significant growth ahead. The Boston Consulting Group estimates that the global fintech market will reach $1.5 trillion in revenue by 2030, representing a fivefold increase from today. This growth is fueled by advancements in artificial intelligence, the adoption of blockchain technology, and the push to expand financial services to billions of unbanked and underbanked individuals worldwide.

Increased customer demand is also shaping fintech careers. A World Economic Forum study found that 51% of fintech companies cite strong consumer interest as the primary driver of their growth. With businesses and individuals seeking faster, more transparent, and digitally integrated financial solutions, professionals with expertise in both finance and technology are more valuable than ever.

Because fintech extends beyond startups and digital banks, careers in this field are not limited to tech-focused roles. Traditional financial institutions, consulting firms, and corporations need professionals who can lead digital transformation, manage financial strategy, and develop innovative solutions for evolving markets. Whether you’re interested in data analysis, risk management, product development, or compliance, fintech offers a variety of paths to explore.

Career Outlook for Fintech Jobs

Fintech is expanding quickly, and companies need skilled professionals to support its growth. Hiring in the sector has remained strong, with mid-sized fintech firms increasing their workforce by nearly 13% in a single quarter, even as job growth in other industries slows. This rapid expansion is fueling demand for experts in data analysis, compliance, software development, and cybersecurity.

Salaries in fintech are also highly competitive. A financial software engineer can earn more than $200,000 per year, while fintech product managers can command salaries well over $250,000, depending on experience. Compared to traditional finance roles, fintech positions often come with higher earning potential, particularly for professionals with strong technical skills.

While demand remains high in the major fintech hubs of New York, London, and San Francisco, opportunities are growing in cities like Singapore, Berlin, and Toronto, where financial services firms continue to invest in digital transformation. Even traditional banks and investment firms are expanding their fintech teams, focusing on areas like digital payments, automated investing, and AI-driven financial advisory services.

How to Prepare for a Career in Fintech

Success in fintech depends on a combination of financial knowledge, technical skills, and industry experience. Some roles require programming expertise, while others focus on regulation, data analysis, or product development. No matter the career path, building the right foundation through education, certifications, and networking can help you stand out in a competitive finance and technology jobs market.

Earn the Right Degree

The MS in Financial Operations and Technology (Fintech) at Pace University is a STEM-designated program that integrates financial theory with practical applications in technology. The curriculum prepares graduates for roles in securities trading, portfolio management, banking, payments, and financial services innovation. Located in the heart of New York City, this program gives students direct access to major financial institutions through networking events and internship opportunities.

Other relevant degrees may include:

- Bachelor’s or Master’s in Finance – Ideal for roles in financial analysis, risk management, and compliance

- Bachelor’s or Master’s in Computer Science – Essential for software engineering, blockchain development, and data science roles

- MBA with a Fintech Focus – Helps bridge the gap between business strategy and financial technology

Gain Industry Certifications

Certifications can demonstrate expertise in specialized areas of fintech and make you a more competitive job candidate. Some of the most recognized certifications include:

- Chartered Financial Analyst (CFA) – Ideal for professionals in investment management, risk analysis, and quantitative finance

- Financial Risk Manager (FRM) – Focuses on risk management within financial institutions

- FinTech Industry Professional (FTIP) – Covers blockchain, AI, and digital payments, specifically for fintech professionals

- Certified Blockchain Developer (CBD) – Demonstrates expertise in blockchain programming and smart contracts

- Certified Information Systems Security Professional (CISSP) – Valuable for cybersecurity roles in fintech

Develop Technical Skills

While not every fintech role requires programming expertise, many positions benefit from knowledge of the following:

- Programming languages – Python, Java, C++, Solidity (for blockchain)

- Data analytics tools – SQL, R, Hadoop, Spark

- Blockchain and cryptography – Smart contracts, distributed ledger technology

- Cloud computing – AWS, Microsoft Azure, Google Cloud (used for scalable fintech applications)

- Financial modeling and algorithms – MATLAB, Excel, machine learning for risk assessment

Gain Practical Experience

Employers look for candidates who can apply their skills in real-world settings. Internships, research projects, and case studies help bridge the gap between theory and practice. Students in Pace University’s MS in Financial Operations and Technology complete a case-based capstone course, providing hands-on experience in fintech applications.

Many financial firms and startups also offer fintech-specific internships. Companies like Goldman Sachs, J.P. Morgan, and Morgan Stanley recruit fintech talent for roles in trading technology, risk analytics, and payment systems.

Network and Build Industry Connections

Breaking into fintech often requires a strong professional network. Consider these strategies:

- Attend fintech conferences and meetups – Events like Money20/20, Finovate, and the MIT Fintech Conference provide networking opportunities with industry leaders.

- Join fintech professional groups – Organizations like the Global Fintech Association and local fintech meetup groups can help expand your connections.

- Leverage university career services – Pace University’s INSPIRE and ASPIRE programs help students and alumni secure internships and full-time roles.

- Engage on LinkedIn and industry forums – Following fintech thought leaders and engaging in discussions can help you stay updated on trends and opportunities.

Top 8 Careers in Fintech

Fintech careers span a range of industries, from banking and investment firms to technology startups and global corporations. Employers seek professionals who can develop secure payment systems, analyze financial data, ensure regulatory compliance, and create digital products that enhance user experience. Below are some of the most in-demand jobs in fintech, along with salary expectations, required skills, education requirements, and common industries hiring for these positions.

1. Financial Systems Manager

Oversees financial technology infrastructure, ensuring payment processing, risk management, and compliance systems function smoothly.

- Average Financial Systems Manager salary (New York City): $220,299

- Required skills: Financial software, risk management, compliance regulations, IT security, ERP systems

- Education requirements: Bachelor’s in finance, information systems, business administration, or related field; MS in financial operations and technology or MBA preferred

- Who’s hiring: Banks, insurance companies, large corporations

2. Fintech Product Manager

Leads the development of financial technology products, working closely with engineers, designers, and business teams.

- Average Fintech Product Manager salary (New York City): $258,248

- Required skills: Product lifecycle management, UX design, market analysis, regulatory knowledge

- Education requirements: Bachelor’s in business, finance, or computer science; MS in financial operations and technology or MBA with a fintech focus preferred

- Who’s hiring: Fintech startups, banks, tech companies developing financial applications

3. Compliance Officer

Ensures fintech companies follow financial regulations, prevent fraud, and maintain data security standards.

- Average Compliance Officer salary (New York City): $172,613

- Required skills: Anti-Money Laundering (AML), Know Your Customer (KYC), General Data Protection Regulation (GDPR), risk assessment, regulatory reporting

- Education requirements: Bachelor’s in finance, law, or business administration; master’s or compliance certifications (CAMS, CRCM) preferred

- Who’s hiring: Banks, payment processors, cryptocurrency firms, regulatory consulting firms

4. Banking Operations Manager

Manages backend processes supporting digital banking services, ensuring transactions are accurate and efficient.

- Average Banking Operations Manager salary (New York City): $164,504

- Required skills: Banking operations, risk management, compliance, financial reporting

- Education requirements: Bachelor’s in finance, business administration, or economics; MS in financial operations and technology preferred

- Who’s hiring: Retail banks, digital banks, financial institutions

5. Financial Software Engineer

Designs and develops software for digital banking, payment processing, and investment platforms.

- Average Financial Software Engineer salary (New York City): $209,125

- Required skills: Java, Python, C#, APIs, cybersecurity, cloud computing

- Education requirements: Bachelor’s in computer science, software engineering, or related field; fintech software certifications beneficial

- Who’s hiring: Banks, fintech startups, financial services firms, software development companies

6. Quantitative Analyst

Uses statistical models and data analysis to optimize trading strategies, assess risk, and develop financial algorithms.

- Average Quantitative Analyst salary (New York City): $282,429

- Required skills: Mathematics, Python, R, MATLAB, machine learning, financial modeling

- Education requirements: Master’s or PhD in quantitative finance, mathematics, statistics, or related field

- Who’s hiring: Investment banks, hedge funds, trading firms, financial institutions

7. Blockchain Developer

Builds and maintains blockchain-based applications for secure transactions, smart contracts, and digital assets.

- Average Blockchain Developer salary (New York City): $176,231

- Required skills: Blockchain frameworks (Ethereum, Hyperledger), Solidity, Python, cryptography, decentralized finance (DeFi)

- Education requirements: Bachelor’s in computer science, software engineering, or related field; blockchain certifications beneficial

- Who’s hiring: Fintech startups, cryptocurrency exchanges, investment firms, tech companies

8. Data Scientist

Analyzes large datasets to detect fraud, optimize lending decisions, and improve financial forecasting.

- Average Data Scientist salary (New York City): $194,095

- Required skills: Machine learning, statistical analysis, SQL, Hadoop, Spark, financial modeling

- Education requirements: Bachelor’s in data science, statistics, or computer science; master’s in analytics or financial engineering preferred

- Who’s hiring: Banks, credit card companies, risk management firms, fintech startups

Note: All salary figures were sourced from Glassdoor.com in March 2025. Salaries can vary depending on factors such as employer, geographic location, experience, and education.

Your Future in Fintech Starts Here

Fintech is reshaping the financial world, and the demand for skilled professionals has never been higher. Banks, investment firms, and fintech companies need experts who can bridge the gap between finance and technology, optimize operations, and drive digital transformation. If you want a career where you can shape the future of banking, investing, payments, and beyond, fintech offers that opportunity.

The right education and experience can help you stand out in this competitive field. Pace University’s MS in Financial Operations and Technology provides the tools to succeed in roles ranging from financial strategy and risk management to product innovation and technology-driven investment services. Reach out today to find out how Pace can help you thrive as a fintech professional.

FAQ

What are some careers in fintech?

Fintech careers span a wide range of roles across industries. Professionals may work as product managers developing digital banking tools, financial strategists leading transformation at traditional firms, or compliance officers navigating new regulations. Other roles include data scientists, UX designers, and cybersecurity specialists. These jobs exist at fintech startups, consulting firms, and corporations undergoing digital transformation—not just in tech companies.

What is the highest-paying job in fintech?

Salaries vary by role, but quantitative analysts and fintech product managers are among the highest earners in the field. In New York City, quantitative analysts average more than $280,000 per year and fintech product managers more than $250,000. These figures reflect the demand for professionals who can build sophisticated financial models and for leaders who can align financial strategy with technology development.

Is fintech a good career?

Yes, fintech offers strong job growth, competitive salaries, and opportunities for advancement. As financial services continue to adopt new technologies, demand for skilled professionals remains high.

How do I break into fintech?

Start by earning a relevant degree, such as Pace University’s MS in Financial Operations and Technology. Gain skills in product management, programming, or data analytics, and seek internships or entry-level roles at fintech companies, banks, or consulting firms. Networking through fintech events and LinkedIn can also help.

Does fintech pay well?

Yes, fintech salaries are often higher than those in traditional finance, especially in roles involving software development, product management, and risk management. Many fintech professionals earn six-figure salaries with experience.

What degree do I need to get into fintech?

Degrees in finance, computer science, data science, or business administration are common in fintech. The MS in Financial Operations and Technology at Pace University provides a strong foundation for careers at the intersection of finance and technology.

Pace Haub Law’s National Trial League Team Advances to Spring Playoffs

The Elisabeth Haub School of Law at Pace University Trial Advocacy Program has once again demonstrated its strength with a top finish by its National Trial League (NTL) team. Competing across seven rounds throughout the fall semester, the team has secured a Top 4 finish and advanced to the spring playoffs. As the reigning NTL champions, this latest success continues the momentum of the Pace Haub Law NTL team.

This year’s team is composed of Skyler Pozo (3L), Maiya Aubry (3L), Alexa Saccomanno (3L), James Page (3L), Marina Di Leo (3L), Olivia Haemmerle (3L), Natalia Garcia Perez (3L), Samantha Diaz (3L), and Karolina Zimny (2L). They are coached by accomplished Pace Haub Law alumni and former advocacy standouts Joseph Demonte ’24, Matthew Mattesi ’24, and Liam Rattigan ’24, whose leadership and expertise have been instrumental in guiding the team through the demanding season.

“I am so proud to be part of this NTL team,” said NTL team member and Chair Executive Director of the Advocacy Program, Skyler Pozo (3L). “From August through November, we have put in countless hours preparing for each competition round. Last year, I was grateful to have been chosen to compete in the playoffs as a 2L and it is very rewarding for our hard work to pay off and make the playoffs again this year. Our coaches have dedicated a tremendous amount of time ensuring we are prepared for each round and we could not have had such a strong finish without their guidance.”

The National Trial League is an innovative, fast-paced online trial competition that features 14 teams, divided into two conferences, competing in a format modeled after traditional sports leagues. Unlike traditional weekend tournaments, the NTL format provides law students with multiple opportunities across the semester to hone their trial advocacy skills in a competitive environment. Between August and November, teams participate in seven rounds, with advancement determined by win-loss record, point differential, and total points. At the end of the regular fall season, four teams move on to the playoffs, which are held in the spring.

During this regular season, Pace Haub Law’s competitors earned several individual recognitions:

- Skyler Pozo – Best Advocate, Rounds 2 & 5

- James Page – Best Advocate, Round 1

- Marina Di Leo – Best Advocate, Round 4

- Samantha Diaz – Best Advocate, Round 6

“The advocates that competed this year are truly amazing,” said NTL Coach and Pace Haub Law alumnus Joseph Demonte ’24. “Seeing their dedication pay off has been inspiring, and I could not be prouder of what they accomplished together.”

With their strong regular-season performance, the team now heads into the spring playoffs, which feature a round-robin semifinal format. The top two teams from those rounds will advance to compete for the championship title, while the remaining two will compete for third place.

“I am beyond proud of the hard work our NTL team has demonstrated over the last four-plus months,” said Director of Advocacy Programs and Professor of Trial Practice Louis Fasulo. “The success of the Pace Haub Law team during the regular season, along with their coaches, shows their ongoing commitment to success coupled with their outstanding advocacy skills. I look forward to seeing them compete in the spring playoff rounds.”

The four Pace Haub Law students competing in the NTL Spring Playoffs are Skyler Pozo, Maiya Aubry, James Page, and Marina Di Leo.

Press Release: Pace University Appoints Alison Carr-Chellman as Provost and Executive Vice President for Academic Affairs

Following a national search, Pace University has announced the appointment of Alison Carr-Chellman, Ph.D., as its next provost and executive vice president for academic affairs. She will begin her tenure on January 20, 2026.

Nationally recognized higher education leader brings more than 25 years of experience to lead academic strategies and student success at Pace

A highly regarded higher education leader and scholar, Provost Carr-Chellman brings more than two decades of academic and administrative experience, with a focus on academic excellence, student success, shared governance and innovation in higher education.

She most recently served as dean of the School of Education and Health Sciences at the University of Dayton, where she led strategic growth across multiple disciplines, stewarded a $29 million budget, substantially increased external research funding, and championed initiatives that advanced equity, inclusion, and student outcomes. Under her leadership, the school improved its national ranking and achieved 100 percent placement rates for undergraduates in teacher education and health sciences.

“Provost Carr-Chellman stood out in this search for her strength as a scholar, her clear and confident communication, and her ability to build strong relationships with faculty and academic leaders,” said Pace University President Marvin Krislov. “She brings a strategic vision shaped by a genuine commitment to student learning and institutional progress. We’re excited for the leadership she will bring as Pace continues to strengthen its academic reputation, expand scholarly productivity and support the success of our faculty, students, and programs.”

The appointment comes during an exciting time at Pace. The university is transforming its campus at 1 Pace Plaza in Lower Manhattan. It is also making significant investments in its cyber range and in its artificial intelligence labs and curriculum, including the addition of three artificial intelligence degrees (two master’s degrees and a Bachelor of Science). Upgrades are planned for its Center for Healthcare Simulation in Pleasantville, and many other initiatives are designed to prepare students for the modern workforce.

Prior to Dayton, Carr-Chellman served as dean of the College of Education, Health and Human Sciences at the University of Idaho, and spent over two decades at Pennsylvania State University, where she held faculty and administrative roles, including department head and program chair, with a focus on instructional design, systems thinking and organizational change in higher education.

Provost Carr-Chellman, a widely published scholar who has authored seven books and more than 175 publications, has over 14,000 citations on Google Scholar, indicating the high impact of her work. Her research centers on systems theory, instructional design, organizational transformation and learning technologies. She has also secured more than $8 million in external research funding as a principal or co-principal investigator.

“Pace has a unique mission rooted in access, excellence and opportunity, and that resonates deeply with me,” Provost Carr-Chellman said. “I’m excited to bring my experience in academic innovation, collaborative leadership and student-centered learning to a community so clearly dedicated to helping students excel and create lives they are proud of.”

She is a nationally and internationally recognized speaker, with presentations delivered in countries including China, Norway, Brazil, France, Japan, and Qatar. Her TED Talk, “Gaming to Re-engage Boys in Learning,” has been viewed more than 1.5 million times.

Throughout her career, Provost Carr-Chellman has been known for her collaborative leadership style, her focus on driving academic quality and her commitment to broadening access to high-impact learning. She has led major initiatives in program development, accreditation, experiential learning, online and hybrid education and community-engaged scholarship.

She also has extensive experience in strategic planning, budget management, cross-campus partnerships and leading complex academic organizations through periods of change. She earned her Ph.D. in instructional systems from Indiana University Bloomington and holds a Master of Science in instructional systems and a Bachelor of Science in education from Syracuse University.

As provost, she will serve as Pace University’s chief academic officer, overseeing academic strategy, faculty affairs, research development and major academic initiatives that advance the university’s academic excellence and student success across all campuses.

About Pace University

Since 1906, Pace University has been transforming the lives of its diverse students academically, professionally, and socioeconomically. With campuses in New York City and Westchester County, Pace offers bachelor’s, master’s, and doctoral degree programs to 13,600 students in its College of Health Professions, Dyson College of Arts and Sciences, Elisabeth Haub School of Law, Lubin School of Business, Sands College of Performing Arts, School of Education, and Seidenberg School of Computer Science and Information Systems.

Elisabeth Haub School of Law at Pace University and the Campaign for Greener Arbitrations – North America Committee Spotlight Emerging Scholarship in Sustainable Arbitration at New York Arbitration Week

The Elisabeth Haub School of Law at Pace University, in partnership with the North America Committee of the Campaign for Greener Arbitrations (CGA-NA), proudly hosted a special New York Arbitration Week program, titled “Greener Arbitration: Insights from the Next Generation of Legal Scholarship.”

The event took place on November 17 at Pace University’s downtown campus and online. It spotlighted innovation, sustainability, and greener practices in international arbitration. The program brought together leading practitioners, academics, and emerging voices in the field for a forward-looking discussion on how international arbitration forums can ensure that both the arbitration process and arbitration awards consider their environmental impact.

This special event also marked the conclusion of the inaugural CGA-NA and Pace Haub Law Greener Arbitration Writing Competition, which attracted 43 submissions from around the world. A highlight of the evening was the announcement of the competition’s first-place winner: Kenny Santiadi, an independent legal researcher from Indonesia, for his article titled “The Arbitrator’s Environmental Fiduciary Duty: A Normative Reconstruction of Legal Ethics in International Arbitration.” This year’s competition was funded by the Elisabeth Haub School of Law at Pace University, with support from almost 20 promotional partners.

“The purpose of the competition is to encourage and recognize excellent legal scholarship related to the mission of the CGA: to reduce the environmental impact of international arbitration and promote more sustainable arbitration practices,” shared Professor Jill Gross, Vice Dean for Academic Affairs and Professor of Law at Pace Haub Law. “Several common themes emerged in the competition which can be classified into two key areas: procedural innovations aimed at transforming the arbitration process to better align with global sustainability goals while maintaining fairness and efficiency, and substantive developments relating to environmental disputes. Each submission brought a significant amount of depth and breadth to the table, with unique perspectives on environmental consciousness in arbitration. While the high quality of the submissions greatly impressed the judging panel, Mr. Santiadi’s article emerged as the clear winner. His work is innovative and forward thinking, and as the winner of this inaugural competition, it will be published in the Pace Environmental Law Review.”

The jury for the selection of the winner was composed of distinguished academics and practitioners: Professor Jill Gross; Professor Josh Galperin, Faculty Director of the Sustainable Business Law Hub and Associate Professor of Law, Pace Haub Law; Dr. Tamar Meshel, Associate Professor and CN Professor of International Trade, University of Alberta Faculty of Law; Lucy Greenwood, independent arbitrator and founder of the Campaign for Greener Arbitrations; William Crosby, Senior Vice President, Associate General Counsel, Managing Attorney, and LATAM Regional Coordinator at Interpublic; and Olivier André, Client Relationship Advisor, Freshfields, and Co-Chair, CGA-NA.

Held on Day 1 of New York Arbitration Week 2025, the program amplified the role of sustainability within one of the most important annual gatherings for the global arbitration community. The program opened with updates on CGA initiatives from CGA Co-Chair Christine Falcicchio, Esq., and CGA-NA Committee Chair Olivier André. An engaging panel followed, moderated by Adam Weir, Associate, Freshfields, and Secretary, CGA-NA Committee. Panelists included Professor Jill Gross; Cherine Foty, Senior Associate, Covington & Burling, and Global Co-Chair of the CGA; Adam Weir; and William Crosby, Interpublic Group. Together, the panelists explored key themes emerging from the 43 competition submissions and offered insights into the future of greener arbitration.

The Campaign for Greener Arbitrations seeks to raise awareness of the significant carbon footprint of dispute resolution. The Campaign addresses the need for environmentally sustainable practices in arbitration. It encourages all stakeholders (including counsel, arbitrators, parties to disputes, and institutions) to commit to the Campaign's Guiding Principles and reduce their carbon footprint when resolving disputes. Pace Haub Law is consistently ranked #1 in Environmental Law in the U.S. News specialty program rankings and has a long history of supporting legal scholarship in a wide array of topics related to environmental law. Pace Haub Law’s ADR Program has also focused on the intersection of environmental law and ADR through three initiatives. The first is its Environmental Dispute Resolution curriculum. The second is its Land Use Law Center, which uses consensus-building techniques to assist municipalities in resolving land use disputes. The third is its Sustainable Business Law Hub, a think tank devoted to addressing global sustainability challenges through policy and research projects.

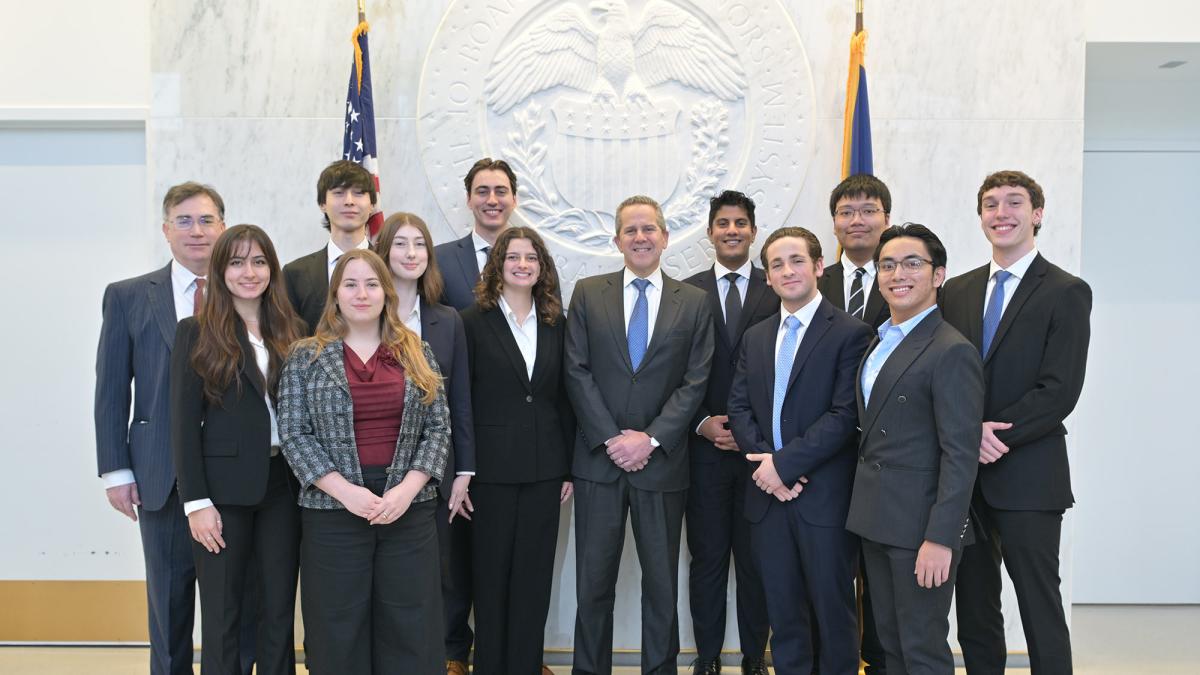

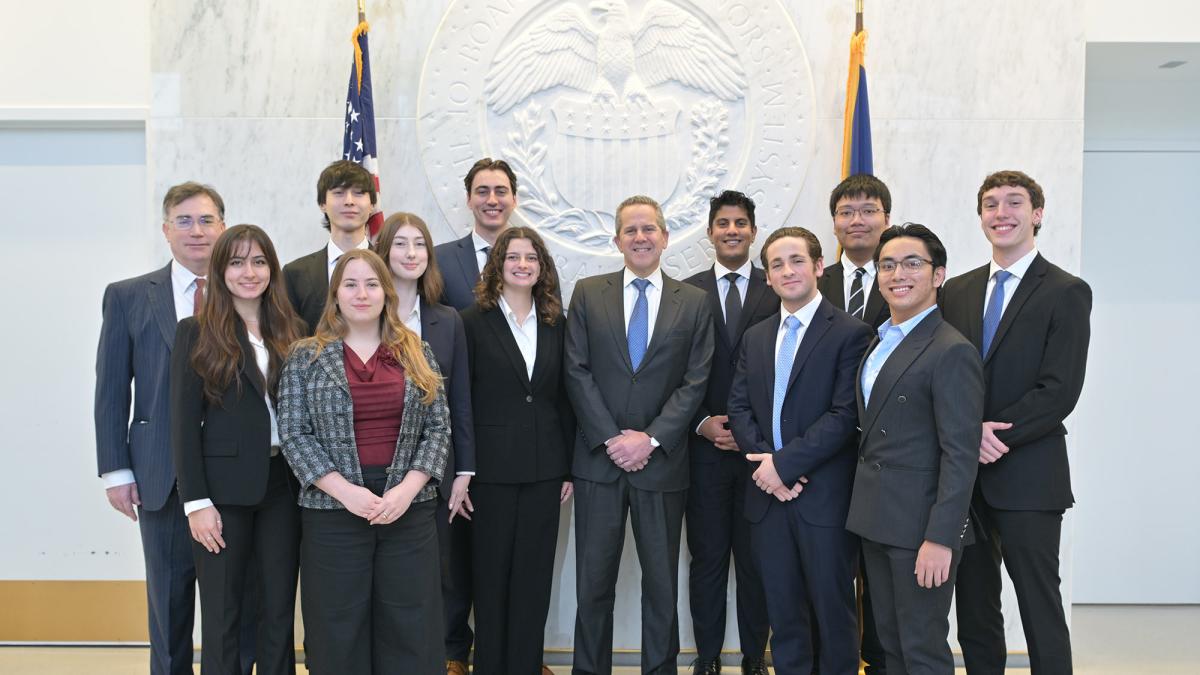

Press Release: Pace University Fed Challenge Team Wins the 22nd Annual National College Fed Challenge

The Pace University Federal Reserve Challenge team has been named the national winner of the 22nd Annual College Fed Challenge, the Federal Reserve recently announced.

Beats Harvard, UPenn, and others, marking Pace University’s sixth national College Fed Challenge title

The prestigious competition, which invites undergraduate teams to analyze economic conditions and propose monetary policy solutions, included 139 schools from 36 states, making this year’s field one of the largest in the program’s history.

The Pace University team advanced through one of the most competitive regional brackets in the country. In the New York region, Pace defeated New York University, Columbia University and Rutgers University, along with all other participating institutions, securing a decisive regional championship and earning a place in the national competition.

At nationals, Pace competed against top programs including Harvard College, Princeton University, University of Miami, University of Virginia and University of Michigan. In the final round, Pace earned the national championship, with Harvard placing second and UCLA finishing third. The University of Pennsylvania, University of Chicago and Davidson College received honorable mentions.

This achievement marks Pace University’s sixth national College Fed Challenge title, following wins in 2014, 2015, 2017, 2019 and 2021.

“This national championship is an extraordinary achievement for Pace University,” said Marvin Krislov, president of Pace University. “Our students demonstrated remarkable analytical skill, teamwork, and professionalism throughout the competition, and their success on the national stage reflects the strength of our academic programs and the power of experiential learning at Pace. We’re also deeply grateful to the dedicated faculty and staff whose mentorship and support made this success possible.”

The 2025 Fed Challenge team includes students from economics, business economics and computational economics programs: Suraj Sharma ’26, captain; Giancarlo Raspanti ’26, co-captain; Brooklyn Bynum ’26, co-captain; Gianna Beck ’28; Emina Bogdanovic ’28; Sheira Dery ’27; Gunnar Freeman ’26; Grace McGrath ’26; Oliver Ng ’27; Khan Mamatov ’27; Laura Melo ’27; Kristina Nasteva ’26; Harvey Nguyen ’26; and Alexander Tuosto ’26.

Months of preparation included economic research, mock presentations and intensive Q&A practice with faculty advisers. Sharma, the team captain, credited the team's success to its work ethic and long hours of collaboration.

“Representing Pace University at the Federal Reserve and competing against the strongest economics programs in the country to win the national title for a record-breaking sixth time is something our whole team is incredibly proud of,” Sharma said. “This win is proof of the promise of opportunity Pace made to us years ago.”

Bynum said the experience pushed the team to grow in meaningful ways.

“The Fed Challenge pushed all of us to grow in ways we never expected — as economists, as teammates and as leaders,” said Bynum. “Competing on the national stage and winning the title was unforgettable, and I’m grateful for the chance to represent Pace alongside such a dedicated and talented team.”

Raspanti said the structure of the competition strengthened both analysis and teamwork.

“The competition strengthened both our analytical ability and the bonds we made with each other,” said Raspanti. “This isn’t just a team; it’s a family. Presenting alongside them in a national championship win is an honor I am truly grateful for.”

Faculty advisers and economics professors Gregory Colman, Ph.D., and Mark Weinstock, CBE, coached the team through each stage of the competition. Colman said he was impressed by the students’ preparation and precision.

“It’s a great achievement and a tribute to the hard work that the students put in, and I could not be prouder of them,” Colman said.

Weinstock said the question-and-answer round was where the students stood out most. “Our students excelled in the Q&A, demonstrating the depth of their understanding of the U.S. economy and monetary policy.”

The Fed Challenge is a signature element of experiential learning within Pace’s economics department, offering students hands-on exposure to economic analysis and policymaking. Alumni of the program have secured internships and full-time positions at institutions such as the Federal Reserve Bank of New York, JPMorgan, Goldman Sachs, Citibank and other major employers. Many return to mentor current competitors, creating a strong and supportive pipeline that strengthens the team year after year. Women made up half of this year’s national championship roster, continuing the department’s longstanding record of developing women leaders in economics.

“Winning two consecutive regional competitions and then capturing the national title is a clear testament to our students’ exceptional abilities and dedication,” said Anna Shostya, Ph.D., chair of the economics department. “Their success reflects not only their hard work and deep economic knowledge, but also the unwavering commitment of our faculty coaches, Professors Gregory Colman and Mark Weinstock, who prepare and mentor our students year after year.”

Earlier this year, John Williams, president of the Federal Reserve Bank of New York, visited Pace University to speak at an event hosted by the Pace Economics Society and Women in Economics, following an invitation from the Fed Challenge team captains.

About Dyson College of Arts and Sciences

Pace University’s liberal arts college, Dyson College, offers more than 50 programs, spanning the arts and humanities, natural sciences, social sciences, and pre-professional programs (including pre-medicine, pre-veterinary, and pre-law), as well as many courses that fulfill core curriculum requirements. The College offers numerous opportunities for internships, cooperative education and other hands-on learning experiences that complement classroom learning and prepare graduates for careers or for graduate and professional study.

About Pace University

Since 1906, Pace University has been transforming the lives of its diverse students—academically, professionally, and socioeconomically. With campuses in New York City and Westchester County, Pace offers bachelor’s, master’s, and doctoral degree programs to 13,600 students in its College of Health Professions, Dyson College of Arts and Sciences, Elisabeth Haub School of Law, Lubin School of Business, Sands College of Performing Arts, School of Education, and Seidenberg School of Computer Science and Information Systems.

Colleges Ease The Dreaded Admissions Process As The Supply Of Applicants Declines

The Hechinger Report features a major story on how colleges are easing the admissions process as the supply of applicants declines — and Pace University dominates the piece from start to finish. Reported entirely from Pace’s Pleasantville Campus, the story uses Pace as its primary case study, with a picturesque array of campus photos and the lead narrative following families on a Pace tour. It highlights Pace’s participation in New York State’s application-fee waiver month and its additional offer of $1,000 per year in financial aid for students who visit and enroll.

This College News Is Totally Changing the Game for High School Students

Dean of Admission Andre Cordon is featured prominently, explaining how Pace is removing barriers and simplifying the process for first-generation and working families. Families interviewed said the experience felt welcoming and more receptive than they expected — citing personalized welcome signage, an easy check-in process, and immediate access to admissions staff.

Everyone Says It’s Harder to Get Into College Than Ever Before. Guess Again.

Olivia Nuzzi, Once Linked To RFK Jr., Is Telling Her Story. The Truth About 'Tell-Alls.'

USA Today turns to Dyson Professor Melvin Williams for perspective on the economics of political “tell-alls.” Professor Williams explains that memoirs chronicling the scandals and transgressions of political figures are often highly lucrative, especially when they center on affairs, misconduct, and personal drama—context that helps explain the enduring market for books that blur the line between politics, media, and entertainment.